Accessing Google Analytics 4 BigQuery data using Python

Google has made available a Google Analytics 4 dataset in its publicly accessible BigQuery resources. It is a very good starting point for exploring GA4 dataset in BigQuery. In this article , we will connect to BigQuery using Python and write a simple query to get data in python data frame.

- Enable BiqQuery API

- Create Service Account for API

- Download JSON file for authentication

- Connect using Python.

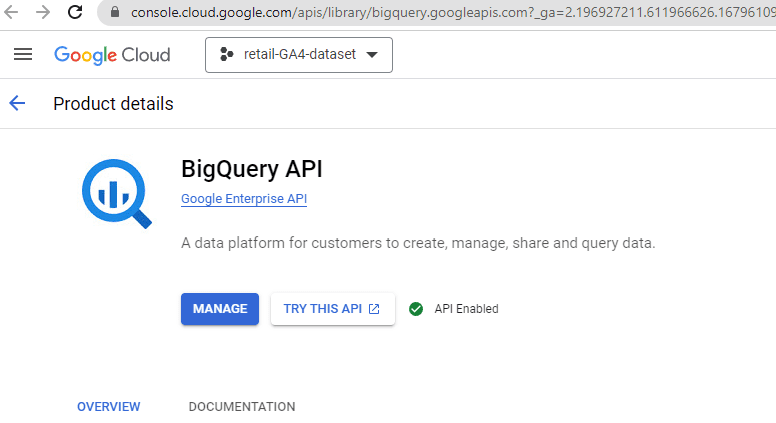

Enable BigQuery API

Create a Google Cloud account if you do not already have one. Then log in to Cloud Console. Once you are in the console you can follow this link to enable BigQuery API. Even if you have a free account, you can still enable BigQuery API and set up access. Once you have enabled the access, you will see API Enabled checked on the API screen.

Create Service Account for API

A service account is a special type of Google Cloud account that allows applications, like our Python script, to interact with Google Cloud services such as BigQuery. Service accounts are separate from user accounts and are associated with a specific project in Google Cloud. It is a best practice to use service accounts when working with Google Cloud services programmatically.

Service accounts authenticate using a private key associated with the account. This key is provided in the form of a JSON credentials file, which is securely generated and downloaded from the Google Cloud Console. By setting the GOOGLE_APPLICATION_CREDENTIALS environment variable to the path of this JSON file, our Python code can authenticate as the service account, establishing its identity with Google Cloud services.

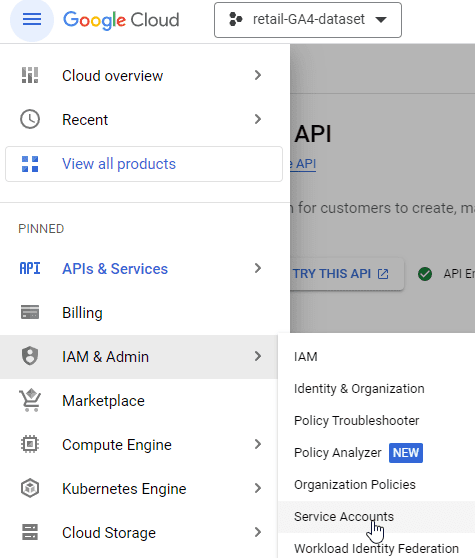

To create a service account using web interface, navigate to IAM &Admin > Service Accounts.

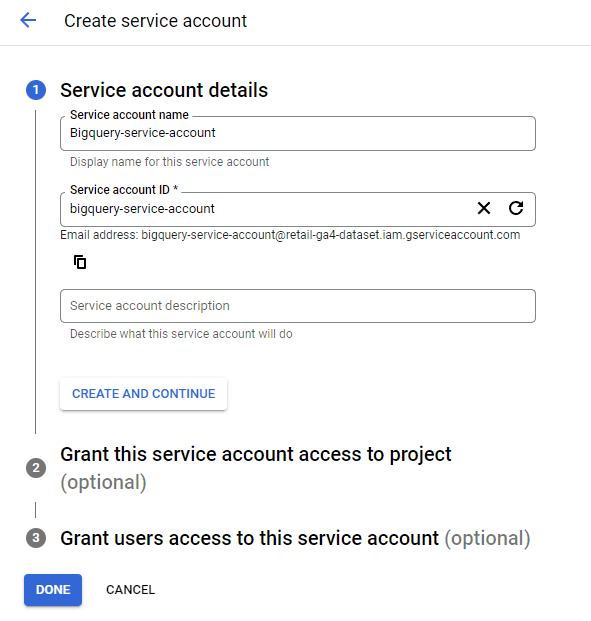

On the next screen click on New Service Account. Pick any name for your new service account in the screen that follows.

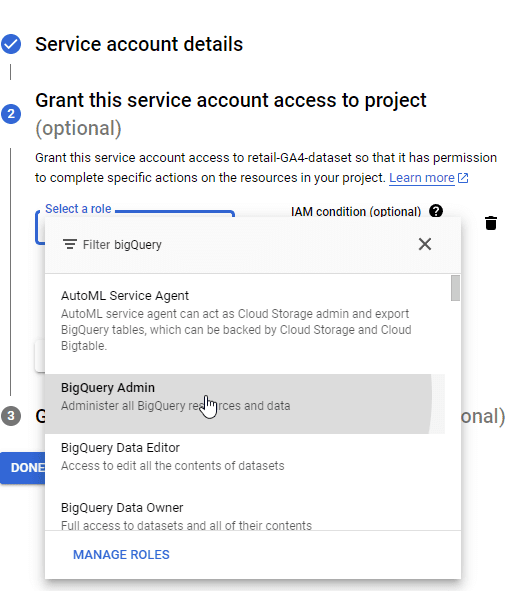

In the next step, grant service account access roles. Since our primary objective is accessing BigQuery data, we can go with BigQuery Admin default role.

Download JSON file for Authentication

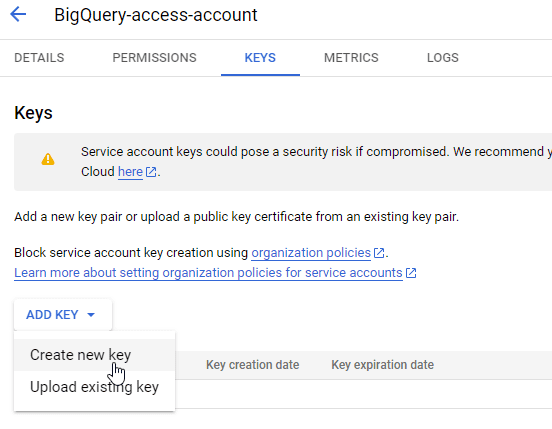

Now that we have created a Service Account, we can create a JSON authentication key file. Navigate to your newly created Service Account and click on Add Key.

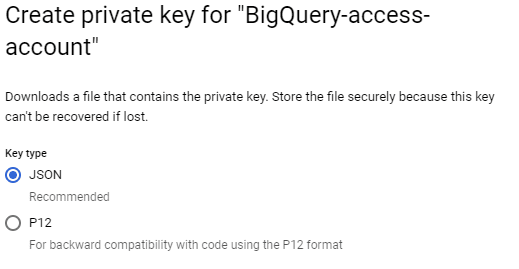

Select JSON on the next screen pop-up and continue.

This will automatically download JSON Service Account file to your computer.

Connect using Python

In the code below, we will use the JSON file created in the previous step to authenticate and then write and execute query using the steps below.

- Import necessary libraries to connect to google BigQuery and get Query results in pandas.

- Connect to Google BigQuery using JSON authentication file for Service Account and initialize a client connection to BigQuery.

- Set our project, dataset, and table prefix.

- Write SQL query and pass it to our project, dataset, and table prefix.

- Execute the query and store the result in a pandas DataFrame.

- Confirm the result by printing the DataFrame content.

# 1. Import necessary libraries to connect to google BigQuery and get Query results in pandas

import os

import pandas as pd

from google.cloud import bigquery

# 2.Connect to Google BigQuery using JSON authentication file for Service Account and initialize a client connection to BigQuery

# Set the path to the JSON credentials file

os.environ['GOOGLE_APPLICATION_CREDENTIALS'] = 'Auth/retail-ga4-dataset-76ffc0b63205.json'

# Initialize the BigQuery client

client = bigquery.Client()

# 3. Set our project, dataset, and table prefix

project_id = 'bigquery-public-data'

dataset_id = 'ga4_obfuscated_sample_ecommerce'

table_prefix = 'events_20210131'

# 4.Write SQL query and pass it our project, dataset and table prefix.

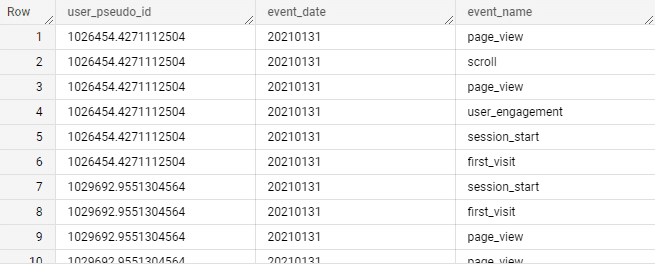

sql_query = f"""

SELECT

user_pseudo_id,

event_date,

event_name

FROM

`{project_id}.{dataset_id}.{table_prefix}`

LIMIT 50

"""

# 5.Execute the query and store the result in a pandas DataFrame

query_job = client.query(sql_query)

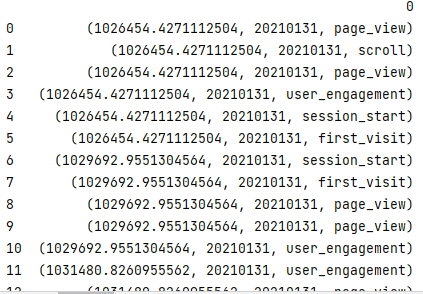

result = pd.DataFrame(list(query_job))

# Confirm the result by printing the DataFrame content

print(result)

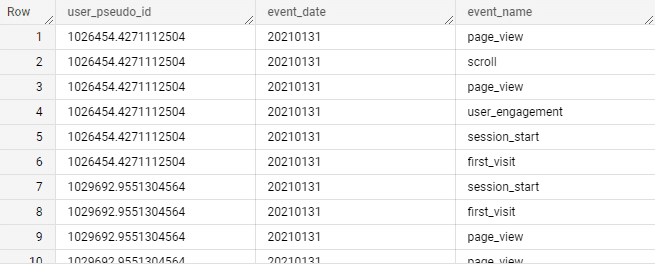

Query using BigQuery SQL

SELECT

user_pseudo_id,

event_date,

event_name

FROM `bigquery-public-data.ga4_obfuscated_sample_ecommerce.events_20210131` LIMIT 50

Query using Python

sql_query = f"""

SELECT

user_pseudo_id,

event_date,

event_name

FROM `{project_id}.{dataset_id}.{table_prefix}`LIMIT 50

"""

Summary

We explored freely available Google Analytics 4 publicly available dataset using python. To connect to BigQuery using python, we first enabled BigQuery API and then created a Service Account and obtained our JSON credentials file. We connected to BigQuery and then wrote a simple query to get a few fields from the tables. Finally, we compared the results of direct sql query in BigQuery interface and python query to confirm that we are extracting it accurately.

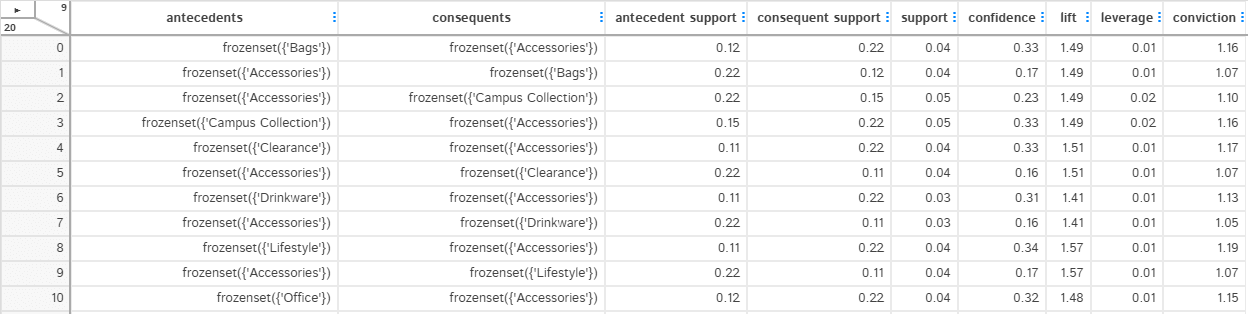

[…] are using Python to connect to BigQuery, I will recommend going through introductory article for getting data from BigQuery using Python. There is 12 months of data available, for this article, I am only getting first 6 months of data […]